What is Googlebot? Exploring Google’s Web Crawler

Hand off the toughest tasks in SEO, PPC, and content without compromising quality

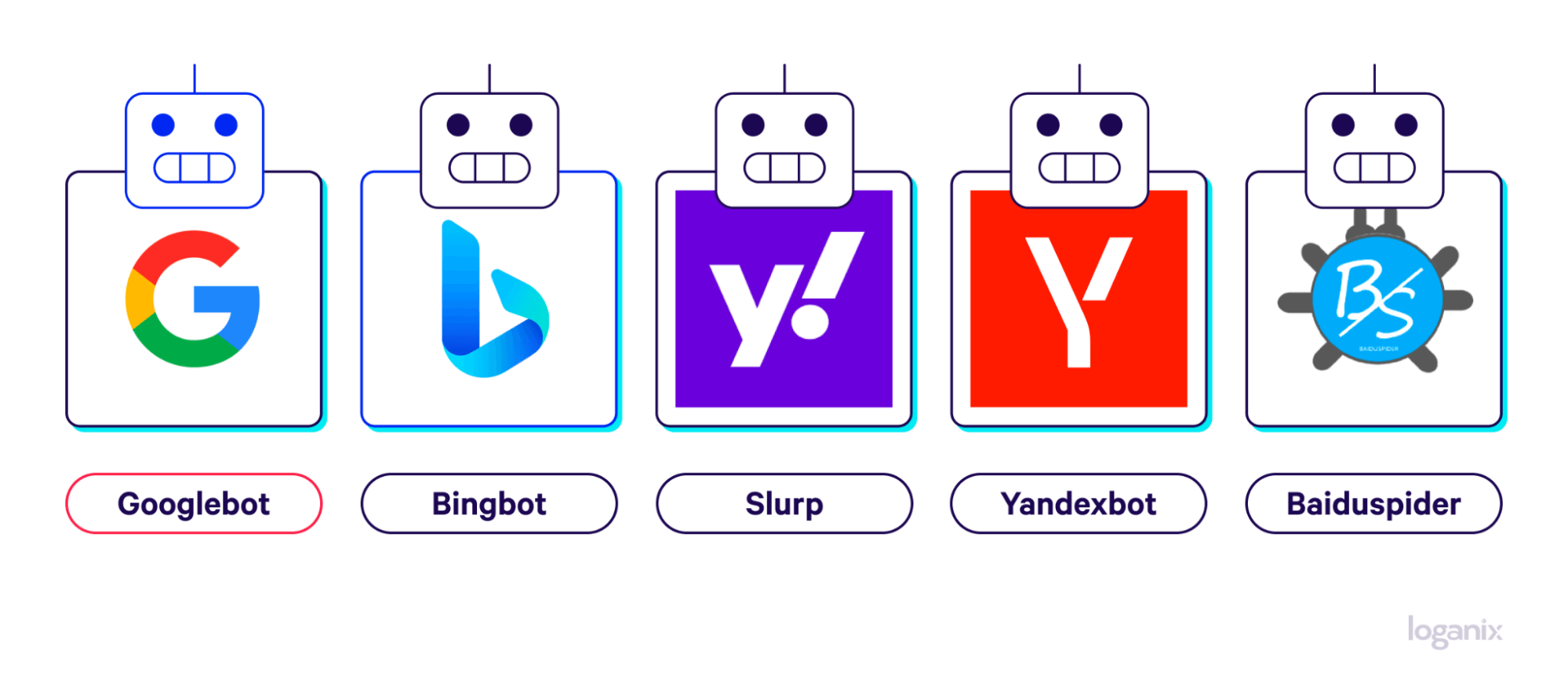

Googlebot is a dominant force, surpassing Bingbot, Facebook’s crawler, and even Amazonbot in activity. In fact, it’s such a preeminent player in the web crawling space that it accounts for an estimated 28.5 percent of all bot hits.

It doesn’t stop there, though. In one 12-month analysis spanning no fewer than 6 billion log files, Googlebot was found to crawl over 300 million URLs, analyzing and making sense of the pages and information it found.

So why do these numbers matter to you?

The answer lies in the web crawler’s functionality. Its relentless crawling feeds Google’s index, the backbone of Google’s search engine, which makes Googlebot the gatekeeper of online content. In other words, if your website isn’t crawlable and indexable, good luck getting your content ranked or in front of your target audience. No ifs, buts, or maybes.

To make sure this isn’t your reality, let’s get you up to speed with Googlebot. In this guide, we’ll:

- answer the question, “What is Googlebot?”

- explore its importance to search engine optimization (SEO),

- and dive into how you can optimize your site to make the most of this powerful web crawler.

What Is Googlebot?

Googlebot (also known as Google Spider or simply Google Crawler) is Google’s web crawler: the program Google uses to discover and crawl new and updated pages on the web so they can be indexed.

Its primary mission is twofold:

- Exploration of web pages: Googlebot scours the web, following links to discover new web pages. Its goal is to find and crawl as much content as possible so that Google’s search results are as comprehensive, helpful, and up-to-date as possible.

- Information gathering: As it traverses from page to page, Googlebot collects valuable information about each site it encounters. The collected data helps keep Google’s vast database current and accurate.

It’s also worth noting here that Googlebot is not a singular entity but a collection of programs run by Google. Operating from various servers worldwide, these crawlers work in unison to streamline the indexing process, covering as much of the web as possible.

In fact, there are 91 variations of Google crawlers and bots, each serving different purposes. The breakdown of Googlebot’s activity includes 8.5 percent for mobile search engine crawling, 5.8 percent for mobile advertising, 5.3 percent for mobile search engines, 4.3 percent for desktop search engines, and 2.3 percent for images.

Learn more: Interested in broadening your SEO knowledge even further? Check out our SEO glossary, where we’ve explained more than 250 terms.

The Importance of Optimizing For Googlebot

Now that we have a rough idea of what Googlebot is, let’s delve into why optimizing for it is so integral to SEO and beyond:

1. Drives Organic Traffic

Without proper optimization for Googlebot, your website will forever be stuck in incognito mode. The importance of Googlebot in driving organic traffic can be illustrated through real-world examples:

Case Study 1: Saramin, one of Korea’s largest job platforms, recognized the importance of Googlebot and invested in fixing crawling errors and optimizing SEO. The result was impressive:

- 15 percent increase in organic traffic in the first year.

- 2x increase in organic search traffic overall.

- During the peak hiring season, traffic even doubled from the previous year’s.

Case Study 2: Alfa Aesar, a research chemicals company, faced challenges with SEO issues and duplicate indexed web pages. Through their efforts to address these problems and optimize for Googlebot, the company saw a 25 percent increase in revenue driven directly from organic traffic.

2. Optimizes Site Performance and User Experience

Because Googlebot crawls as both desktop and smartphone users, optimizing for it pushes you to make sure your content is accessible and renders properly across devices. That work improves the user experience and often improves site performance along the way.

To highlight this point, let’s look at a case study:

Case Study: TemplateMonster, a digital marketplace for website templates, had a problem: Googlebot was regularly discovering 3 million orphaned pages (pages with no internal links pointing to them). These pages were wasting the site’s crawl budget and holding back its SEO performance. By identifying and eliminating 250K unnecessary pages, the company saw:

- Significant improvement in crawl efficiency.

- Enhanced site performance and user experience.

- Positive impact on organic search rankings.
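The orphan-page idea can be sketched in a few lines. This is a generic approach, not TemplateMonster’s actual method: compare the URLs Googlebot requested (for example, from your server logs) with the pages reachable by following internal links from the homepage. Anything crawled but unreachable is an orphan candidate. The link graph, the URLs, and the `reachable_pages` helper are all hypothetical.

```python
from collections import deque


def reachable_pages(link_graph, start="/"):
    """Breadth-first search over internal links, starting from the homepage."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in link_graph.get(page, ()):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


# Hypothetical internal link graph: page -> pages it links to.
LINK_GRAPH = {
    "/": ["/templates/", "/blog/"],
    "/templates/": ["/templates/wordpress/"],
    "/blog/": [],
}

# Hypothetical set of URLs Googlebot requested, e.g. pulled from access logs.
CRAWLED = {
    "/",
    "/templates/",
    "/templates/wordpress/",
    "/blog/",
    "/old-promo/",
    "/templates/retired-42/",
}

# Crawled but not reachable through internal links: candidates to link or remove.
orphans = sorted(CRAWLED - reachable_pages(LINK_GRAPH))
print(orphans)  # ['/old-promo/', '/templates/retired-42/']
```

Whether an orphan should be linked, redirected, or removed is still a judgment call; the set difference only tells you where to look.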

How to Control Googlebot

There might be instances where you’d want to control how Googlebot interacts with your site. Here’s how you can achieve that:

1. Understand Googlebot Types

Googlebot comes in two primary flavors:

- Googlebot Desktop: This crawler simulates a user on a desktop.

- Googlebot Smartphone: This crawler simulates a user on a mobile device.
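The two crawlers announce themselves with different user agent strings, so you can tell them apart in your server logs. Below is a minimal sketch with a hypothetical helper, `googlebot_type`, that relies on an assumed heuristic rather than any official Google method: both user agents contain "Googlebot/", and Google’s published smartphone user agent also contains "Mobile". ("W.X.Y.Z" is the placeholder Google uses for the Chrome version.) A string match says nothing about whether the request genuinely came from Google.

```python
def googlebot_type(user_agent: str) -> str:
    """Return 'smartphone', 'desktop', or 'other' for a User-Agent string.

    Heuristic only: checks for the "Googlebot/" token (which excludes
    crawlers such as "Googlebot-Image/1.0") and the "Mobile" token.
    """
    if "Googlebot/" not in user_agent:
        return "other"
    # The smartphone user agent includes "Mobile"; the desktop variants do not.
    return "smartphone" if "Mobile" in user_agent else "desktop"


SMARTPHONE_UA = (
    "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/W.X.Y.Z Mobile "
    "Safari/537.36 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
)
DESKTOP_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

print(googlebot_type(SMARTPHONE_UA))  # smartphone
print(googlebot_type(DESKTOP_UA))     # desktop
```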

Both crawler types obey the same product token (user agent token), "Googlebot", in robots.txt, so you can’t target Googlebot Smartphone or Googlebot Desktop separately there. robots.txt is a standard websites use to communicate with web crawlers and other automated agents. It’s a simple text file placed at the root of your site (for example, https://www.example.com/robots.txt) that tells web crawlers like Googlebot which URLs they can or can’t request.
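To sanity-check a simple robots.txt before publishing it, Python’s standard-library `urllib.robotparser` can tell you whether a given user agent token may fetch a URL. The robots.txt content and example.com URLs below are hypothetical. One caveat: Python’s parser applies the first matching rule in a group, while Google uses the most specific matching rule, so this sketch is only reliable for simple rule sets like this one.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: the "Googlebot" group covers both Googlebot Desktop
# and Googlebot Smartphone; every other crawler is blocked entirely.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent, url in [
    ("Googlebot", "https://example.com/blog/post"),       # allowed
    ("Googlebot", "https://example.com/private/report"),  # disallowed
    ("Bingbot", "https://example.com/blog/post"),         # falls back to "*"
]:
    print(agent, url, parser.can_fetch(agent, url))
```

Pass the product token ("Googlebot") rather than the full user agent string, since the parser matches on the token.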

2. Monitor Googlebot Access

Googlebot is designed to access your site at a reasonable rate: for most sites, not more than once every few seconds on average. Keep in mind that Googlebot operates from many IP addresses, all bearing the Googlebot user agent, so its requests are spread across many machines. This design is meant to let it crawl efficiently without overwhelming your server. If you notice that your server is struggling with Googlebot’s requests, you can reduce the crawl rate.
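To see how often Googlebot actually hits your server, you can tally its requests from your access logs. This sketch assumes logs in the common "combined" format; the log lines, the IP addresses (taken from documentation-only ranges), and the `googlebot_activity` helper are hypothetical. It counts requests whose user agent mentions Googlebot, per IP and per minute. User agents can be spoofed, so treat the result as requests *claiming* to be Googlebot.

```python
import re
from collections import Counter

# Hypothetical sample data. The Googlebot user agent is one of the forms Google
# documents; the IPs come from documentation-only ranges.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"

LOG_LINES = [
    f'192.0.2.10 - - [06/Jan/2024:10:15:01 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'192.0.2.11 - - [06/Jan/2024:10:15:07 +0000] "GET /pricing/ HTTP/1.1" 200 3310 "-" "{GOOGLEBOT_UA}"',
    f'198.51.100.7 - - [06/Jan/2024:10:15:30 +0000] "GET / HTTP/1.1" 200 8000 "-" "{BROWSER_UA}"',
    f'192.0.2.10 - - [06/Jan/2024:10:16:02 +0000] "GET /about/ HTTP/1.1" 200 2048 "-" "{GOOGLEBOT_UA}"',
]

# ip, identity, user, [time], "request", status, bytes, "referer", "user agent"
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "[^"]*" \d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"$'
)


def googlebot_activity(lines):
    """Count requests whose user agent claims to be Googlebot, per IP and per minute."""
    per_ip = Counter()
    per_minute = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match is None or "Googlebot" not in match["ua"]:
            continue  # skip unparseable lines and non-Googlebot traffic
        per_ip[match["ip"]] += 1
        # "06/Jan/2024:10:15:01 +0000" -> "06/Jan/2024:10:15" (minute bucket)
        per_minute[match["time"][:17]] += 1
    return per_ip, per_minute


per_ip, per_minute = googlebot_activity(LOG_LINES)
print(per_ip.most_common())
print(per_minute.most_common(1))
```

In practice you would feed it the lines of your real log file; a sustained spike in the per-minute counts is the signal that crawl load may be worth reducing.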

3. Adjust Crawl Rate in Search Console

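Historically, one way to control Googlebot’s crawl rate was the crawl rate setting in Google Search Console: after verifying your site ownership, you could lower the crawl rate to a level that suited your site’s needs. Note that Google announced it would retire this crawl rate limiter tool on January 8, 2024, so the setting may no longer be available. Whichever method you use, be cautious not to set the rate too low, as that can slow how quickly Google discovers and indexes your new and updated content.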

4. Automatic Crawl Rate Reduction

If there’s an urgent need to temporarily reduce Googlebot’s crawl rate, you can return an error page with a 500, 503, or 429 HTTP response status code. Googlebot will automatically reduce the crawl rate upon encountering a significant number of these errors. Once the errors decrease, the crawl rate will gradually increase.
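As a rough illustration of the 503 approach, here is a hypothetical WSGI middleware, `throttle_crawlers`, built only on Python’s standard WSGI conventions. It assumes you supply your own `is_overloaded` check; while that check returns true, requests whose user agent mentions Googlebot get a 503 and everyone else is served normally. The Retry-After header is standard HTTP, but nothing here assumes Googlebot honors it. Because this is meant for short emergencies, switch it off as soon as load returns to normal.

```python
def throttle_crawlers(app, is_overloaded, retry_after_seconds=3600):
    """Wrap a WSGI app so Googlebot receives 503 responses while overloaded.

    `is_overloaded` is a hypothetical zero-argument callable you provide,
    e.g. one that checks CPU load or request queue depth.
    """

    def wrapped(environ, start_response):
        user_agent = environ.get("HTTP_USER_AGENT", "")
        if "Googlebot" in user_agent and is_overloaded():
            start_response(
                "503 Service Unavailable",
                [
                    ("Content-Type", "text/plain; charset=utf-8"),
                    ("Retry-After", str(retry_after_seconds)),
                ],
            )
            return [b"Temporarily overloaded, please retry later.\n"]
        return app(environ, start_response)

    return wrapped


def hello_app(environ, start_response):
    """Stand-in for your real site."""
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"Hello\n"]


def call(app, user_agent):
    """Invoke a WSGI app directly (no server) and return (status, headers, body)."""
    captured = {}

    def start_response(status, headers, exc_info=None):
        # The apps above never use WSGI's legacy write() callable, so none is returned.
        captured["status"] = status
        captured["headers"] = dict(headers)

    body = b"".join(app({"HTTP_USER_AGENT": user_agent}, start_response))
    return captured["status"], captured["headers"], body


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
app = throttle_crawlers(hello_app, is_overloaded=lambda: True)
print(call(app, GOOGLEBOT_UA)[0])                     # 503 Service Unavailable
print(call(app, "Mozilla/5.0 (Windows NT 10.0)")[0])  # 200 OK
```

To serve it for real, you could pass `app` to `wsgiref.simple_server.make_server("", 8000, app)` during testing, or to your production WSGI server.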

5. Stay Updated

Google publishes the IP address ranges used by Googlebot as a JSON file. Checking incoming requests against this list helps you tell genuine Googlebot activity apart from bots that merely spoof the Googlebot user agent.
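Here is a minimal sketch of that check using Python’s standard `ipaddress` and `json` modules. The JSON layout (a "prefixes" list of "ipv4Prefix"/"ipv6Prefix" entries) is assumed to mirror Google’s published file, and the sample ranges are documentation-only placeholders, not real Googlebot addresses. The `load_networks` and `is_googlebot_ip` helpers are hypothetical names.

```python
import ipaddress
import json

# Illustrative stand-in for Google's Googlebot IP-range file. The ranges are
# documentation-only placeholders, NOT real Googlebot addresses.
SAMPLE_RANGES_JSON = """
{
  "prefixes": [
    {"ipv4Prefix": "192.0.2.0/24"},
    {"ipv6Prefix": "2001:db8::/32"}
  ]
}
"""


def load_networks(json_text):
    """Turn the JSON prefix list into ipaddress network objects."""
    data = json.loads(json_text)
    networks = []
    for entry in data.get("prefixes", []):
        cidr = entry.get("ipv4Prefix") or entry.get("ipv6Prefix")
        if cidr:
            networks.append(ipaddress.ip_network(cidr))
    return networks


def is_googlebot_ip(ip, networks):
    """True if `ip` falls inside any of the loaded ranges."""
    address = ipaddress.ip_address(ip)
    # Checking an IPv4 address against an IPv6 network (or vice versa) is simply False.
    return any(address in network for network in networks)


networks = load_networks(SAMPLE_RANGES_JSON)
for ip in ["192.0.2.10", "2001:db8::1", "198.51.100.7"]:
    print(ip, is_googlebot_ip(ip, networks))
```

With the real file, refresh your copy periodically, since the published ranges can change.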

Conclusion and Next Steps

Our team of SEO experts is well-versed in the intricacies of Googlebot and other search engine algorithms. We offer tailored solutions to ensure that your website is not only discoverable by Googlebot but also optimized for performance and user experience.

🚀 Ready to take the next step? Contact Loganix today and let us help you harness the power of Googlebot to elevate your website to new heights. 🚀


Written by Aaron Haynes on January 6, 2024

CEO and partner at Loganix, I believe in taking what you do best and sharing it with the world in the most transparent and powerful way possible. If I am not running the business, I am neck deep in client SEO.