What Is Spamdexing? SEO Temptations & Pitfalls to Avoid

Hand off the toughest tasks in SEO, PPC, and content without compromising quality

In the high-stakes game of search engine optimization (SEO), spamdexing remains a tempting shortcut that promises quick wins. However, as Google continues to refine its search algorithms, and with even a small misstep capable of tanking organic traffic, is employing black hat SEO practices worth the risk?

To answer this question and more, here, we

- answer the pressing question, “What is spamdexing,”

- detail the tactics used in SEO spam,

- and break down how you can avoid the potentially punishable tactics that may harm your SEO efforts.

What Is Spamdexing?

The word spamdexing, a portmanteau of “spam” and “indexing,” speaks for itself. It encompasses the black hat SEO practices used in search engine spamming—a suite of tactics used in isolation or collectively to manipulate search engine indexes, unfairly boosting a website’s visibility in search results.

Spamdexing is considered unethical because the tactics used compromise the integrity of search results, allowing low-value or irrelevant content to rank on the search engine results pages (SERPs). Google is in the business of providing the most seamless and frictionless user experience possible and fortifying its 90 percent+ market share, so ranking content that isn’t helpful to its users goes against its business model and everything the company strives to achieve.

Learn more: Interested in broadening your SEO knowledge even further? Check out our SEO glossary, where we’ve explained more than 250 terms.

The Inception of Spamdexing

The roots of spamdexing can be traced back to the early days of the internet—a time when search engine algorithms weren’t as sophisticated as they are today. A lack of sophistication left loopholes that could be exploited by savvy webmasters possessing a succeed-at-all-costs mentality.

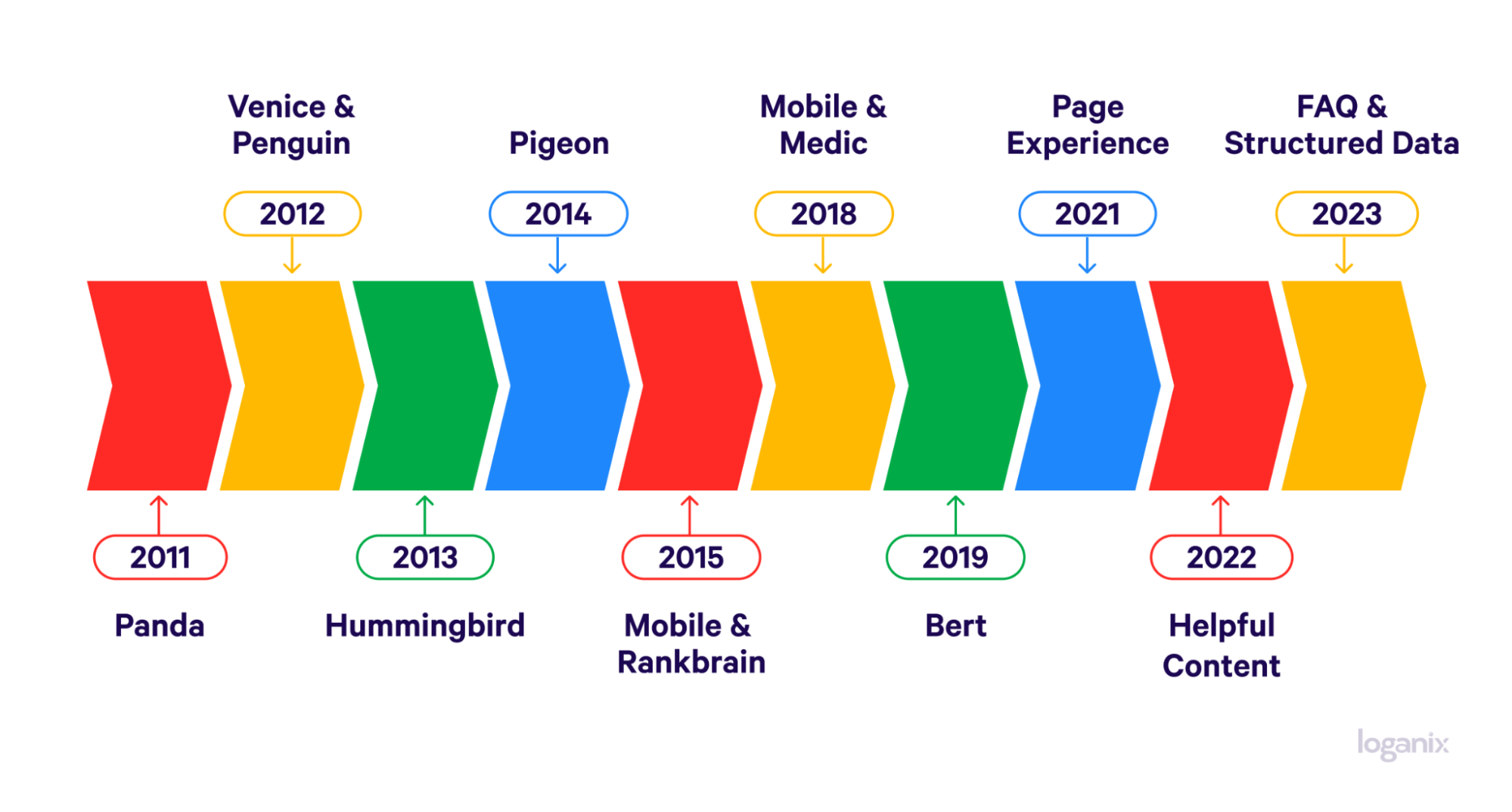

As search engines, particularly Google, became aware of these manipulative tactics, they refined their search algorithms to detect and counteract spamdexing. They adjusted search results to ensure fairness and, in cases of severe violations, imposed penalties on the offending websites, either algorithmic penalties or the dreaded manual actions.

Naturally, black and grey hat SEO practitioners didn’t take this lying down. They continually refined their tactics to outsmart Google’s evolving algorithms, putting the ball back in Google’s court.

Google refines, spamdexing tactics evolve. Google refines again, tactics evolve again. This perpetual dance between Google and those attempting to manipulate search results has resulted in an ongoing cat-and-mouse game, with each side constantly adjusting its strategies in a bid to stay one step ahead.

Spamdexing in a Modern Context

Today, Google’s spam policies are stringent, and the search engine is exceptionally proactive—some critics argue, perhaps overly so—in regularly updating its algorithms to identify and counteract spamdexing efforts. As we touched on, Google’s algorithm updates aim to strengthen the user experience and demote or remove content that violates Google’s guidelines.

As in the earlier days of search engine spamming, manipulative tactics still carry significant risks, which are meant to discourage webmasters from trying their hand at spamdexing. Manual actions are less frequent than they once were, but algorithmic penalties are common. The reason likely comes down to resources: as Google’s index has grown to an enormous 100,000,000 gigabytes of data, the company relies increasingly on automated systems and less on human intervention.

Ultimately, the consequences are the same: a webmaster using spamdexing tactics will likely be found out, their website will be penalized, and little to no organic traffic will be directed their way as a result. This has made spamdexing a high-risk, low-reward strategy in today’s digital landscape.

How Does Spamdexing Work?

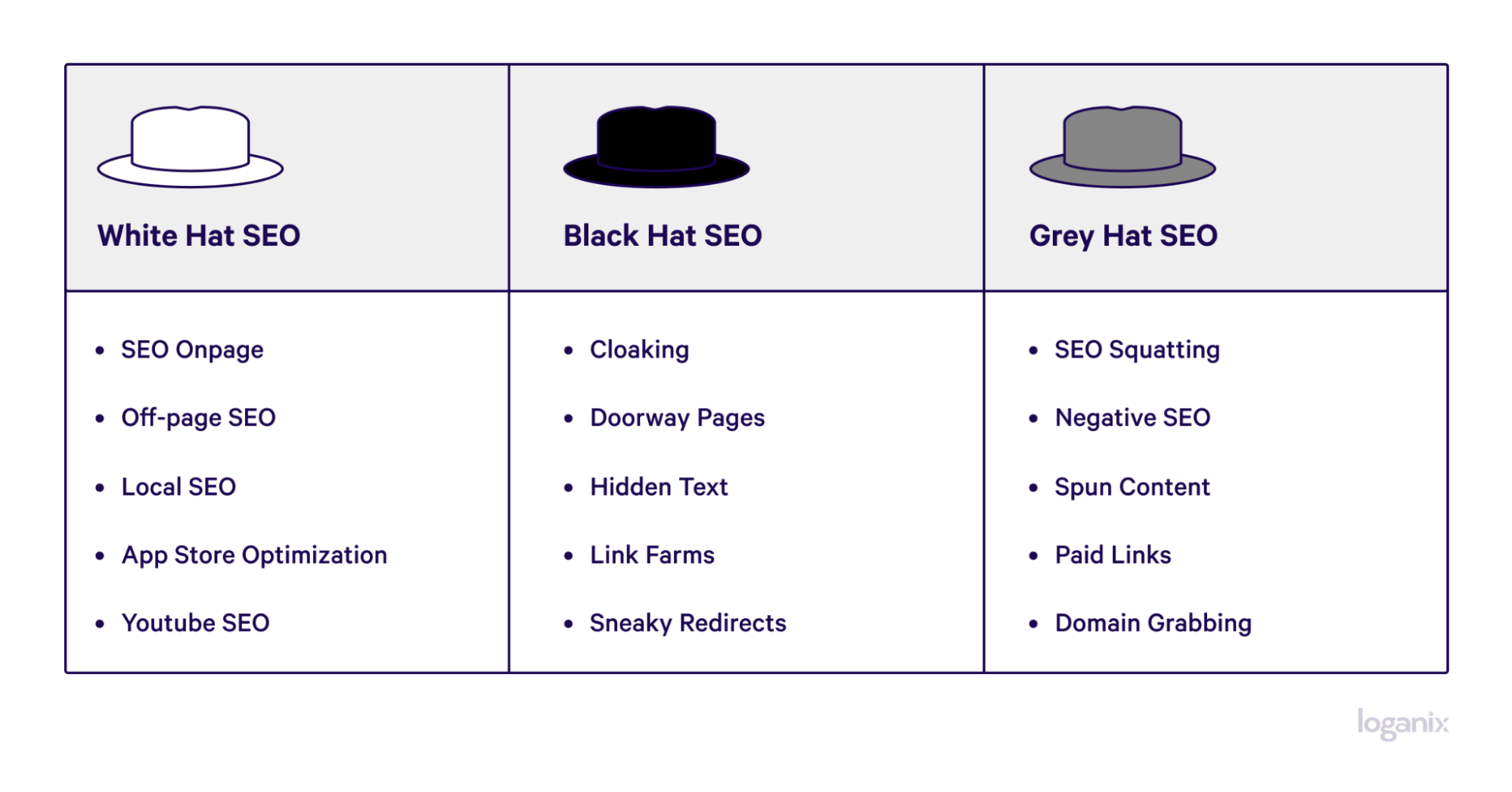

Now that we have a foundational understanding of spamdexing and its evolution, let’s delve into the two primary categories of tactics used in spamdexing: content spam and link spam.

Content Spam

Content spam involves manipulating the content of a webpage to deceive search engines. Common methods include:

- Automatically generated content is text generated programmatically with little to no regard for quality or user experience.

- Cloaking shows different content to search engines than to users. For example, a site might display a page of text to search engines for indexing, while users see a page of images or videos.

- Doorway pages are created to rank highly for specific search queries, leading users to the same destination or to intermediate pages that are not as useful.

- Hidden text involves placing text on a page in a way that is invisible to users but readable by search engines, often by using white text on a white background or setting the font size to zero.

- Keyword stuffing involves overloading a webpage with repeated or irrelevant keywords to manipulate rankings.

- Scraped content is content taken from other websites and republished without adding value.

- Thin content refers to pages that add little or no value, such as affiliate pages that provide no additional content or doorway pages created to funnel visitors to a particular site.
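To make keyword stuffing more concrete, here is a minimal Python sketch that measures how much of a page's text is taken up by a single keyword. The function names and the 15 percent threshold are assumptions chosen purely for illustration. Google does not publish a density cutoff, and this is not how any search engine actually scores content.

```python
import re
from collections import Counter


def keyword_density(text: str, keyword: str) -> float:
    """Return the share of words in `text` that equal `keyword` (case-insensitive)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    return Counter(words)[keyword.lower()] / len(words)


def looks_stuffed(text: str, keyword: str, threshold: float = 0.15) -> bool:
    """Flag text whose keyword density exceeds an assumed, arbitrary threshold."""
    return keyword_density(text, keyword) > threshold


natural = "Our bakery sells fresh bread, pastries, and coffee every morning."
stuffed = "Cheap shoes here. Buy cheap shoes. Cheap shoes online. Best cheap shoes."

print(looks_stuffed(natural, "bread"))  # False
print(looks_stuffed(stuffed, "shoes"))  # True
```

The sketch only handles single-word keywords and very short samples, so treat any hit as a prompt to reread the copy rather than as a verdict.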

Link Spam

Link spam aims to manipulate search engine rankings through deceptive linking practices, such as:

- Buying expired domains involves purchasing expired domains with established authority and using them to create links to a target site.

- Excessive guest blogging and link exchanges involve publishing low-quality guest posts or swapping links solely for the sake of the links.

- Private Blog Networks (PBNs) are networks of websites created solely to link to a target site to boost that site’s rankings.

- User-generated link spam involves placing links in forums, comments, or other user-generated content that lacks editorial oversight.

- Website hacking/link injection is the unauthorized insertion of links into a compromised (hacked) website.
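From a site owner's side, user-generated and injected links are the easiest of these to check for. The sketch below scans a chunk of HTML, such as a comment thread, for external links that carry none of the `rel` values `nofollow`, `ugc`, or `sponsored`. The class and function names and the sample markup are hypothetical, and the host comparison is deliberately simplistic (it does not treat `www.example.com` and `example.com` as the same site).

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

# rel values commonly used to qualify outbound links
QUALIFIERS = {"nofollow", "ugc", "sponsored"}


class UnqualifiedLinkFinder(HTMLParser):
    """Collect external links that carry no qualifying rel value."""

    def __init__(self, own_host: str):
        super().__init__()
        self.own_host = own_host
        self.flagged = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        href = attr.get("href") or ""
        host = urlparse(href).netloc
        if not host or host == self.own_host:
            return  # relative or internal link, nothing to flag
        rel = set((attr.get("rel") or "").lower().split())
        if not rel & QUALIFIERS:
            self.flagged.append(href)


def find_unqualified_links(html: str, own_host: str) -> list:
    finder = UnqualifiedLinkFinder(own_host)
    finder.feed(html)
    return finder.flagged


comments = """
<p>Great post! <a href="https://example.com/about">About us</a></p>
<p>Visit <a href="https://spam.example.net/pills">cheap pills</a></p>
<p>See <a href="https://other.example.org/" rel="ugc nofollow">my blog</a></p>
<p><a href="/contact">Contact</a></p>
"""

print(find_unqualified_links(comments, "example.com"))
# ['https://spam.example.net/pills']
```

An unqualified external link is not proof of spam. The list is simply a starting point for a manual review of what visitors or attackers may have added to your pages.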

How Do Search Engines Detect and Penalize Spamdexing?

We’ve touched on the consequences of using spamdexing tactics, namely algorithmic penalties and manual actions, but we haven’t yet explored which of Google’s algorithms target black hat SEO tactics.

Algorithms and Automated Systems

Some of the well-known algorithms include:

- Penguin targets websites that engage in link spamming and manipulative link-building practices.

- Panda focuses on content quality, penalizing websites with thin, low-quality, or duplicate content.

- Hummingbird aims to understand the context and intent behind search queries, helping to reduce the effectiveness of keyword stuffing.

These algorithms work together to analyze various aspects of a website, including its content, link profile, and user experience, to identify signs of spamdexing.

Prevention and Countermeasures

Let’s quickly discuss the preventive measures and best practices that webmasters can implement to protect their websites from falling into the trap of spamdexing.

Best Practices for Website Owners

- Focus on quality content so that the insights you offer are high-quality, relevant, and valuable to your users. Avoid thin content, and be sure your content genuinely addresses the needs and questions of your audience rather than simply aligning with on-page SEO best practices.

- Avoid black hat SEO tactics and practices considered manipulative or deceptive, such as keyword stuffing, hidden text, and cloaking. Stick to white hat SEO practices that align with search engine guidelines.

- Regularly audit your website to identify and fix any issues that could be considered spamdexing. This includes checking for broken links, ensuring all content is visible and relevant, and verifying that your website’s link profile is clean and natural.

- Implement security measures to protect your website from hackers who might inject spammy content or links.

- Educate yourself and stay informed about the latest SEO best practices and updates to search engine algorithms. Kudos, you’re doing this step right now.
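Part of the audit step above, checking that all content is visible, can be roughly automated. The following sketch looks only at inline `style` attributes and flags elements with a zero font size, `display: none`, `visibility: hidden`, or a text color identical to the background color. All names are hypothetical, and the approach is a simplifying assumption: it ignores external stylesheets and JavaScript, and `display: none` has plenty of legitimate uses (menus, modals), so every finding needs a human look.

```python
import re
from html.parser import HTMLParser
from typing import Optional

# Matches values such as "0", "0px", or "0.0em", but not "0.8em"
ZERO_SIZE = re.compile(r"0(\.0+)?[a-z%]*")


def parse_style(style: str) -> dict:
    """Turn an inline style string into a {property: value} dict."""
    decls = {}
    for part in style.split(";"):
        if ":" in part:
            name, value = part.split(":", 1)
            decls[name.strip().lower()] = value.strip().lower()
    return decls


def hidden_reason(style: str) -> Optional[str]:
    """Return why an inline style may hide text, or None if nothing stands out."""
    decls = parse_style(style)
    if ZERO_SIZE.fullmatch(decls.get("font-size", "")):
        return "zero font size"
    if decls.get("display") == "none":
        return "display: none"
    if decls.get("visibility") == "hidden":
        return "visibility: hidden"
    color = decls.get("color")
    if color and color == decls.get("background-color"):
        return "text color matches background"
    return None


class HiddenStyleAuditor(HTMLParser):
    """Record (tag, reason) for every element with a suspicious inline style."""

    def __init__(self):
        super().__init__()
        self.findings = []

    def handle_starttag(self, tag, attrs):
        style = dict(attrs).get("style")
        reason = hidden_reason(style) if style else None
        if reason:
            self.findings.append((tag, reason))


def audit_hidden_styles(html: str) -> list:
    auditor = HiddenStyleAuditor()
    auditor.feed(html)
    return auditor.findings


page = (
    '<div style="color:#fff; background-color:#fff">cheap shoes cheap shoes</div>'
    '<p style="font-size: 0px">buy now</p>'
    '<p style="font-size: 0.8em">Fine print</p>'
)
print(audit_hidden_styles(page))
# [('div', 'text color matches background'), ('p', 'zero font size')]
```

Running a check like this after a suspected hack can also surface injected content, since attackers often hide the links and text they add.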

Learn more: SEO audit services.

Spamdexing FAQ

Q1: Why Is Keyword Stuffing a Common Spamdexing Technique?

Answer: Keyword stuffing is a prevalent spamdexing technique, as it is a straightforward and low-effort way to manipulate search engine algorithms, aiming to artificially boost a webpage’s ranking through excessive use of keywords. However, this practice leads to content that is both unnatural and difficult to read, diminishing the user experience and potentially resulting in penalties from search engines.

Q2: How Can You Report Spamdexing or Suspicious SEO Practices?

Answer: If you encounter spamdexing or suspicious SEO practices, you can report them directly to Google through the company’s “Report Quality Issues” page. Providing detailed information helps Google investigate the issue and take appropriate action to maintain the quality of search results.

Q3: How Can Users Identify and Protect Themselves from Spamdexing?

Answer: Users can protect themselves from spamdexing by being vigilant and critically evaluating websites, especially if the content appears to be low-quality, stuffed with keywords, or if the site engages in suspicious linking practices.

Conclusion and Next Steps

With today’s algorithmic sophistication, your SEO efforts should be based on building a strong foundation of quality content, ethical SEO practices, and a user-centric approach.

This is where Loganix steps in. From link building and content creation to SEO audits and strategy development, our team of experts is dedicated to implementing white-hat SEO strategies that drive organic traffic, boost user experience, and contribute to the long-term success of your website.

🚀 Take the next step towards sustainable and ethical SEO success by exploring Loganix’s SEO services. 🚀

Written by Adam Steele on January 25, 2024

COO and Product Director at Loganix. Recovering SEO, now focused on understanding how Loganix can make the work-lives of SEO and agency folks more enjoyable and profitable. Writing from beautiful Vancouver, British Columbia.